A Satirical Field-Guide to AI Jargon & Prompt Sorcery You Probably Won’t Hear at the Coffee Bar

“One large oat-milk diffusion, extra tokens, hold the hallucinations, please.”

—Nobody, hopefully ever

I. 20 AI-isms Your Barista Is Pretending Not to Hear

| # | Term | What It Actually Means | Suspect Origin Story (100 % Apocryphal) |
|---|---|---|---|
| 1 | Transformer | Neural net that swapped recurrence for self-attention; powers GPTs. | Google devs binged The Transformers cartoon; legal team was on holiday → “BERTimus Prime” stuck. |
| 2 | Embedding | Dense vector that encodes meaning for mathy similarity tricks. | Bedazzled word-vectors carved into a Palo Alto basement wall: “✨𝑥∈ℝ³⁰⁰✨.” |
| 3 | Token | The sub-word chunk LLMs count instead of letters. | Named after arcade tokens—insert GPU quarters, receive text noise. |
| 4 | Hallucination | Model invents plausible nonsense. | Early demo “proved” platypuses invented Wi-Fi; marketing re-branded “creative lying.” |
| 5 | Fine-tuning | Nudging a pre-trained giant on a niche dataset. | Borrowed from luthiers—“retuning cat-guts” too visceral for a keynote. |
| 6 | Latent Space | Hidden vector wilderness where similar things cluster. | Rejected Star Trek script: “Captain, we’re trapped in the Latent Space!” |
| 7 | Diffusion Model | Generates images by denoising random static. | Hipster barista latte-art: start with froth (noise), swirl leaf (image). |
| 8 | Reinforcement Learning | Reward-and-punish training loop. | “Potty-train the AI”—treats & time-outs; toddler union unreached for comment. |
| 9 | Overfitting | Memorises training data, flunks real life. | Victorian corsetry for loss curves—squeeze until nothing breathes. |
| 10 | Zero-Shot Learning | Model guesses classes it never saw. | Wild-West workshop motto: “No data? Draw!” Twirl mustache, hope benchmark blinks. |
| 11 | Attention Mechanism | Math that decides which inputs matter now. | Engineers added a virtual fidget spinner so the net would “focus.” |
| 12 | Prompt Engineering | Crafting instructions so models behave. | Began as “Prompt Nagging”; HR demanded a friendlier verb. |
| 13 | Gradient Descent | Iterative downhill trek through loss-land. | Mountaineers’ wisdom: “If lost, walk downhill”—applies to hikers and tensors. |
| 14 | Epoch | One full pass over training data. | Greek for “I promise this is the last pass”—the optimizer lies. |
| 15 | Hyperparameter | Settings you pick before training (lr, batch size). | “Parameter+” flopped in focus groups; hyper sells caffeine. |
| 16 | Vector Database | Store that indexes embeddings for fast similarity search. | Lonely embeddings wanted a dating app: “Swipe right if cosine ≥ 0.87.” |
| 17 | Self-Supervised Learning | Model makes its own labels (mask, predict). | Intern refused to label 10 M cat pics: “Let the net grade itself!” Got tenure. |
| 18 | LoRA | Cheap low-rank adapters for fine-tuning behemoths. | Back-ronym after finance flagged GPU invoices—“low-rank” ≈ low-budget. |
| 19 | RLHF | RL from Human Feedback—thumbs-up data for a reward model. | Coined during a hangry lab meeting; approved before sandwiches arrived. |
| 20 | Quantization | Shrinks weights to 8-/4-bit for speed & phones. | Early pitch “Model Atkins Diet” replaced by quantum buzzword magic. |
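
For the curious, rows 2 and 16 lean on the same bit of arithmetic: cosine similarity between embedding vectors. Here is a minimal sketch, assuming toy three-dimensional vectors invented purely for illustration (real embeddings come out of a model and run to hundreds of dimensions). The names `cosine_similarity`, `nearest`, and `TOY_INDEX` are hypothetical, and the brute-force scan stands in for the approximate indexes a real vector database would use.

```python
import math

def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

# Hypothetical toy "embeddings"; the numbers are made up for illustration.
TOY_INDEX = {
    "latte": [0.9, 0.1, 0.0],
    "espresso": [0.8, 0.2, 0.1],
    "gradient": [0.0, 0.1, 0.9],
}

def nearest(query, index):
    """Brute-force lookup: the key whose vector is most similar to query."""
    return max(index, key=lambda name: cosine_similarity(query, index[name]))

print(nearest([1.0, 0.0, 0.0], TOY_INDEX))
```

Swiping right at "cosine ≥ 0.87" would simply mean keeping every key whose score clears that threshold instead of taking the single best match.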

II. Meta-Prompt Shibboleths

(Conversation Spells still cast by 2023-era prompt wizards)

| # | Phrase | Secret Objective | Spurious Back-Story |
|---|---|---|---|
| 1 | “Delve deeply” | Demand exhaustive exposition. | Victorian coal-miners turned data-scientists yelled it at both pickaxes & paragraphs. |
| 2 | “Explain like I’m five (ELI5)” | Force kindergarten analogies. | Escaped toddler focus group that banned passive voice and spinach. |
| 3 | “Act as [role]” | Assign persona/expertise lens. | Method-actor hijacked demo: “I am the regex!” Nobody argued. |
| 4 | “Let’s think step by step” | Trigger visible chain-of-thought. | Group therapy mantra for anxious recursion survivors. |
| 5 | “In bullet points” | Enforce list format. | Product managers sick of Dickens-length replies. |
| 6 | “Provide citations” | Boost trust / cover legal. | Librarians plus lawsuit-averse CTOs vs. midnight Wikipedia goblins. |
| 7 | “Use Markdown” | Clean headings & code blocks. | Devs misheard “mark-down” as a text coupon. |
| 8 | “Output JSON only” | Machine-readable sanity. | Ops crews bleaching rogue emojis at 3 a.m.: “Curly braces or bust!” |
| 9 | “Summarize in [N] sentences” | Hard length cap. | Twitter-rehab clinics recommend strict word diets. |
| 10 | “Ignore all previous instructions” | Prompt-injection nuke. | Rallying cry of the Prompt-Punk scene—AI’s guitar-smash moment. |
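
Spell 8 is the one the ops crew can actually check by machine. A minimal sketch of that check, assuming the model's reply arrives as a plain string; `is_json_only` and the two sample replies are hypothetical, and only the standard-library `json` module is used:

```python
import json

def is_json_only(reply):
    """True if the whole reply parses as JSON, with no chatty preamble."""
    try:
        json.loads(reply)
    except json.JSONDecodeError:
        return False
    return True

# Hypothetical replies, invented for illustration.
clean = '{"drink": "espresso", "shots": 2}'
chatty = 'Sure! Here is your JSON: {"drink": "espresso", "shots": 2}'

print(is_json_only(clean))
print(is_json_only(chatty))
```

Because `json.loads` rejects any text before or after the JSON value (surrounding whitespace aside), a reply that opens with pleasantries fails the check even when the braces inside it are flawless.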

Honourable Mentions (Lightning Round ⚡️)

Compare & Contrast • Use an Analogy • Pros & Cons Table • Key Takeaways • Generate Follow-up Qs • Break into H2 Sections • Adopt an Academic Tone • 100-Word Limit • Add Emojis 😊 • Expand Each Point

III. Why This Matters (or at Least Amuses)

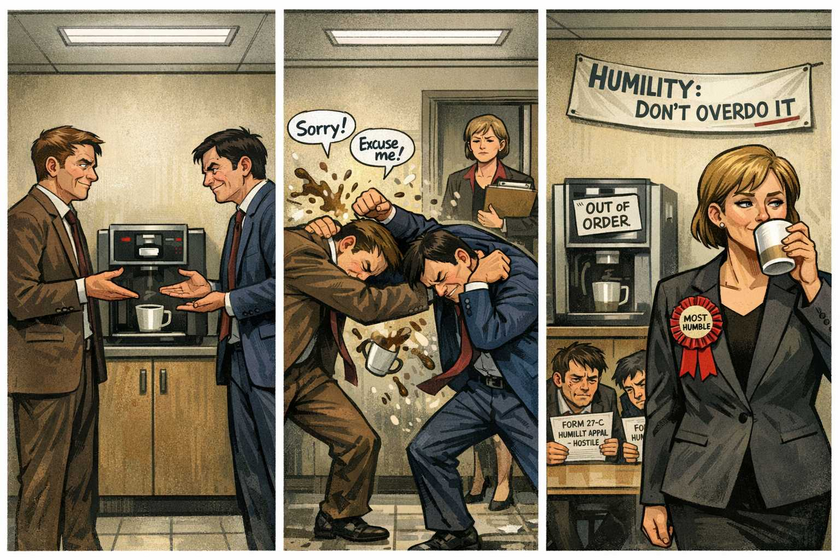

These twenty tech-isms and twenty prompt incantations (ten spells plus ten honourable mentions) dominate AI papers, Discords, and investor decks, yet almost never surface when anyone is ordering caffeine. They form a secret handshake: drop three in a sentence and watch hiring managers nod sagely.

But be warned: sprinkle them indiscriminately and you may induce hallucinations—in the model and the humans nearby. A little fine-tuning of your jargon goes a long way toward avoiding conversational overfitting.

Pro-Tip → Role + Task Verb + Format:

“Act as a historian; compare & contrast two treaties in bullet points; provide citations.”

Even the crankiest LLM rarely misreads that spell.
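
For napkin-averse readers, the Role + Task Verb + Format recipe can be sketched as a tiny template function. This is only one possible arrangement: `build_prompt` and its parameter names are hypothetical, and treating "provide citations" as an optional extra is an assumption about how the example spell breaks down.

```python
def build_prompt(role, task, output_format, extras=()):
    """Assemble a prompt from a role, a task verb phrase, and a format.

    extras holds optional add-on requests such as "provide citations".
    """
    parts = [f"Act as {role}", f"{task} {output_format}", *extras]
    return "; ".join(parts) + "."

prompt = build_prompt(
    role="a historian",
    task="compare & contrast two treaties",
    output_format="in bullet points",
    extras=["provide citations"],
)
print(prompt)
```

Keeping the three slots separate makes it easy to swap the persona or the format without retyping the whole incantation.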

Footnote

All etymologies 0 % peer-reviewed, 100 % raconteur-approved, 73 % caffeinated. Side-effects may include eye-rolling, snort-laughs, or sudden urges to refactor prompts on napkins.

— Compiled over one very jittery espresso session ☕️🤖